(Cloudy with a Chance of Science)

Authors: Jeff D’Ambrogia, Fengchen Liu, Tin Ho, Shawfeng Dong, Gary Jung

Published on January 7, 2022.

Credit: Starecat.com

In some respects, cloud computing has been around for so long that it seems old hat these days. Gone are the early days at computing conferences where almost every vendor had an obligatory slide introducing acronyms such as IaaS, PaaS, and SaaS (Infrastructure/Platform/Software as a Service). Now it seems that everything has moved to the cloud. Or has it?

For scientific computing, the move to cloud has been happening at a much slower pace. Latency in transferring big data from on-premise experimental labs to the cloud; latency in multi-node communications affecting large scale simulation performance; and the high recurring costs of intensive cloud use all have been barriers to the significant adoption of cloud for science. However, there are many signs that change is finally underway in scientific computing and, although cloud computing will never completely replace on-premise scientific computing, it is becoming an important complement in pushing forward today’s research frontiers. In this article, we’ll provide some information about cloud computing at the Lab as we talk about some of the services that we provide to help scientists make use of the cloud to do science.

Why Use Cloud Computing?

Simply defined, cloud computing delivers a wide variety of computing resources – data storage, computation, and networking, to name just a few – to users on an on-demand, as-needed, and where-needed basis. Using a web-based UI or Infrastructure-as-Code deployment scripts, users simply provision the amount of computing and storage they want from the cloud provider, without needing to invest in their own computing infrastructure. Costs are based only on the resources used and how long they are used.

Compared to traditional on-premise computing or self-managed systems, cloud computing can be more expensive when overall costs are compared. For many researchers, the choice is between the one-time cost of purchasing hardware, which works better for some types of funding, and the recurring cost of using the cloud. For example, researchers could make a one-time purchase of compute server hardware to add to the Lab’s Lawrencium Condo cluster, which would provide computing for the service life of the hardware (at least 5 years), and be done with it. However, cloud computing offers advantages over other systems: immediate and easy access to resources, cutting-edge hardware and software features, potentially unlimited scalability in compute and storage, and built-in security models. It also eliminates the need to purchase and manage your own server hardware. These advantages can be a good justification for the additional cost of using cloud computing.
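The trade-off between a one-time hardware purchase and recurring cloud charges can be roughed out with simple arithmetic. The sketch below is only illustrative: the function names and all dollar figures are hypothetical, and it deliberately ignores real-world factors such as power, staff time, discounts, and usage that varies month to month. The only number taken from the article is the service life of at least 5 years.

```python
import math

# Service life quoted in the article ("at least 5 years"), expressed in months.
SERVICE_LIFE_MONTHS = 5 * 12


def break_even_month(hardware_cost: float, monthly_cloud_cost: float) -> int:
    """Return the first whole month in which cumulative cloud spending
    reaches the one-time hardware cost (hypothetical, flat monthly spend)."""
    if hardware_cost < 0 or monthly_cloud_cost <= 0:
        raise ValueError("costs must be non-negative and monthly cost positive")
    return math.ceil(hardware_cost / monthly_cloud_cost)


def cheaper_option(hardware_cost: float, monthly_cloud_cost: float,
                   months: int = SERVICE_LIFE_MONTHS) -> str:
    """Compare total spending over the given number of months."""
    cloud_total = monthly_cloud_cost * months
    if cloud_total > hardware_cost:
        return "hardware"
    if cloud_total < hardware_cost:
        return "cloud"
    return "tie"


if __name__ == "__main__":
    # Made-up example figures, not LBL pricing.
    hardware, monthly = 12_000.0, 500.0
    print("Break-even month:", break_even_month(hardware, monthly))
    print("Cheaper over service life:", cheaper_option(hardware, monthly))
```

A steady, heavy workload tends to reach break-even early and favor hardware, while bursty or short-lived workloads may never reach it, which is where the cloud's on-demand model pays off.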

Commercial Cloud Available Now for Researchers and Staff

Since 2017 Science IT has maintained Master Payer contracts for both Amazon Web Services (AWS) and Google Cloud Platform (GCP) to make cloud computing available to all staff and researchers at LBL. This allows users to quickly get access to their desired provider of cloud services without having to put in their own requisition or make a separate procurement or even use their own credit card. Costs incurred by each user will be billed back to their project ID code (PID) monthly, one month in arrears.

The master payer contracts include significant discounts compared to published list pricing, as well as global data egress waivers that eliminate the egress charges normally incurred by moving data out of the cloud. Generally speaking, under the master payer contracts AWS costs are approximately 8-10% below the list pricing published by AWS, and GCP costs are 25% below the list pricing published by GCP. Both are better than academic institution discounts.
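To make the discount arithmetic concrete, here is a minimal Python sketch. The discount rates are the approximate figures quoted above; the list-price bill and the function name are hypothetical, and the egress waiver is not modeled.

```python
# Approximate discount rates quoted in the article.
AWS_DISCOUNT_RANGE = (0.08, 0.10)  # roughly 8-10% below list pricing
GCP_DISCOUNT = 0.25                # 25% below list pricing


def discounted_cost(list_price: float, discount: float) -> float:
    """Return the cost after applying a fractional discount to a list price."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return list_price * (1.0 - discount)


if __name__ == "__main__":
    # Hypothetical monthly bill at list pricing, for illustration only.
    monthly_list_price = 1000.00

    aws_low_discount, aws_high_discount = AWS_DISCOUNT_RANGE
    aws_best = discounted_cost(monthly_list_price, aws_high_discount)
    aws_worst = discounted_cost(monthly_list_price, aws_low_discount)
    gcp = discounted_cost(monthly_list_price, GCP_DISCOUNT)

    print(f"AWS: ${aws_best:.2f} to ${aws_worst:.2f}")
    print(f"GCP: ${gcp:.2f}")
```

Note that this compares discounts on equal list-price bills only. List prices for comparable services differ between providers, so a larger discount does not by itself mean a lower final cost.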

LBL’s Science IT Consulting team has close relationships with both cloud vendors and arranges technology training classes and office hours with each vendor to help our cloud users utilize the services they’re interested in using.

Cloud Usage and Growth at LBL

Usage of cloud computing services continues to grow year over year at LBL. As you can see from the chart below, the Lab’s cloud spending has more than quadrupled over the last four years, and cloud spending in 2021 increased by 25% from 2020. GCP usage, which was nearly non-existent in 2017, now represents a significant portion of the cloud spend, while AWS usage has dropped slightly in the last two years as a few of the very large cloud users have moved to GCP to take advantage of the larger discounts. Two interesting notes: LBL is the largest user of GCP across the DOE Labs, and IT Division’s Richard Gregory led the negotiations with Google to establish significant discounts for all the DOE Labs.

Amazon AWS vs Google GCP

Both Amazon and Google are behemoths in the cloud service space and, capability-wise, they are more alike than they are different, although the names they use for each service differ. The table below provides a quick Rosetta Stone of who calls which service what.

| | AWS | GCP |
| --- | --- | --- |
| Number of services | 175+ | 100+ |
| Compute | EC2 | Compute Engine VM |
| Object Storage | S3 | Storage |
| Application Server | ElasticBeanstalk | AppEngine |
| Database | RDS, DynamoDB, DocumentDB | SQL, BigTable, BigQuery |
| Containers | ECS, EKS | Kubernetes Engine |
| Serverless | Lambda | Cloud Functions |
| AI/ML | SageMaker, Rekognition | AutoML, AI Notebooks |
| VMware | VMware Cloud | VMware Engine |
| Security | IAM, KMS | IAM, Cloud KMS |
| Migration | CloudEndure | StratoZone |

Choosing between AWS and GCP is largely like choosing between Safeway and Lucky when shopping for groceries. Much of it boils down to service offerings, pricing (with discounts and credits), and personal preference. There is substantial inertia against changing providers, since you will likely have data stored in one provider’s cloud and there could be a cost involved in data egress (if not waived by the LBL master payer agreement). Tooling created with one provider may not work out of the box with the other, and many services differ in features and functionality from their counterparts on the other provider. If you want integration with Google Apps such as Google Docs, that is one of the few obvious reasons to choose GCP. Additionally, our identity management services work natively in GCP, making account authentication simple. On the other hand, AWS has been providing cloud service as we know it for longer than GCP, so if you have collaborators who are in AWS, staying in their cloud could make things a bit easier for everyone.

For an independent comparison of GCP vs AWS, read this article by eWeek magazine here:

Cloud Consulting for Science

Although getting started with a simple cloud instance is straightforward, cloud providers offer myriad services and tools, which can be overwhelming to the uninitiated. Knowing, for example, which Jupyter notebook-like tool to use – whether it be Colab, Vertex AI Notebooks, or SageMaker Notebook – can take some investigation and some trial and error. For other researchers looking to scale up or move into production, there are hurdles to moving their workflow from their laptop to a cloud infrastructure. Data movement, use of specialized tools, and integration with on-prem resources all have to be worked out.

With these concerns in mind, the Science IT consulting team offers consulting to scientists looking to utilize the cloud for their research. Here are some examples of our recent consulting engagements.

Google Cloud Vertex AI: Sample Centering Using AutoML. Fully automated and unattended macromolecular crystallographic data collection relies on robust alignment of crystal samples within the X-ray beam. This step has historically been performed through a manual click-to-center strategy. AutoML (Google Cloud pre-trained APIs for image, tabular, text and video) enables researchers to train high-quality models with minimal ML expertise or effort, and the trained models can be deployed on GCP or downloaded and deployed on local compute resources. We worked with Dr. Scott Classen (ALS) to build an object-detection model using AutoML that automatically detects and classifies two types of loops: nylon loop and MiTeGen loop.

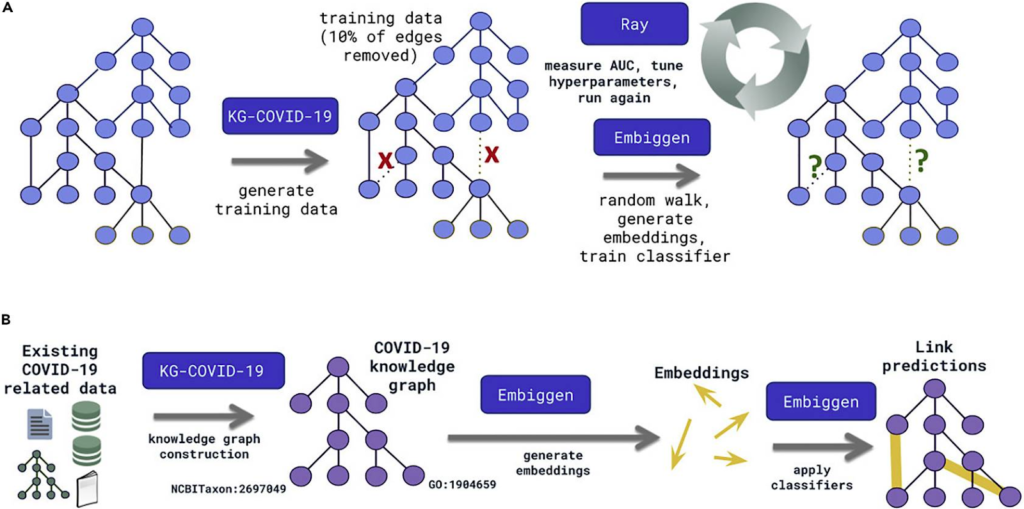

Google Cloud COVID-19 Research Grants program. We worked with Dr. Justin Reese (EGSB) to set up an on-demand computing environment on Google Cloud for his research on KG-COVID-19, a flexible framework that ingests and integrates heterogeneous biomedical data to produce knowledge graphs (KGs), and can be applied to create a KG for COVID-19 response. This KG framework also can be applied to other problems in which siloed biomedical data must be quickly integrated for different research applications, including future pandemics. For more detailed information about the KG-COVID-19 project, please see this article and repository.

AWS SageMaker: ML models for the simulated spectral data analysis. X-ray absorption spectroscopy (XAS) produces a wealth of information about the local structure of materials, but interpretation of spectra often relies on easily accessible trends and prior assumptions about the structure. Dr. Yang Ha (ALS) and his team have demonstrated that random forest models can automate this process to predict the coordinating environments of absorbing atoms from their XAS spectra. We worked with Dr. Yang Ha to continue his previous work and compare the performance of random forest models against that of deep learning models. For more detailed information about this project, please see this article and repository for random forest models, and this repository for deep learning models and performance comparison.

Automated Defect Identification in Electroluminescence Images of Solar Modules. We worked with Xin Chen, Todd Karin, and Anubhav Jain of the Energy Storage Area to deploy their automatic pipeline “PV-Vision” on Google Cloud Platform, in order to analyze electroluminescence images of solar modules and identify cell defects. Their tool is fast (~0.5s/module), accurate (the macro F1 score on the test dataset reaches 0.83), and open source. Applying the tool to a survey of 2.4 million cells exposed to fire identified increased defect rates on affected cells.
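For readers unfamiliar with the macro F1 score mentioned above: it is the unweighted average of the per-class F1 scores, so rare classes count as much as common ones. The sketch below is a generic, minimal implementation in plain Python. It is not the PV-Vision code, and the class labels in the example are hypothetical.

```python
from typing import Hashable, Sequence


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of per-class F1 scores over all classes seen
    in either the true or the predicted labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    classes = set(y_true) | set(y_pred)
    if not classes:
        raise ValueError("no labels given")

    f1_scores = []
    for cls in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class has no hits.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)


if __name__ == "__main__":
    # Hypothetical labels for four solar cells.
    truth = ["intact", "intact", "crack", "crack"]
    predicted = ["intact", "crack", "crack", "crack"]
    print(f"macro F1 = {macro_f1(truth, predicted):.3f}")
```

Because every class contributes equally, a model that performs well only on the most common class scores poorly on this metric.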

How to get started

Getting answers about the LBL cloud program and getting access to it is easy: just email us at ScienceIT@lbl.gov and we’ll answer any questions you might have about the program, talk with you about how cloud computing can help your research, and guide you on which cloud provider might best fit your needs. From there we’ll work with you to get your cloud account set up and working, assist you in creating a monthly spending budget with alerts, and see if there are any usage credits available to you as you start your cloud journey. You can also visit the IT web site for more specific details on the discounts and features available from each cloud provider.

Additionally, the Science IT consulting team is available to you as needed to help guide your usage of cloud services, help with cloud architecture design, and provide tools and services to meet your training needs.