Authors: Wei Feinstein, Shawfeng Dong, Gary Jung

Contents

Overview of HPC Services at LBNL

– No Cost and Low-Cost Access to Lawrencium

– Storage Services on Lawrencium

– Data Transfer on Lawrencium

– Open OnDemand Web Portal Service

– New Addition of Nvidia A40 GPU Nodes to ES1 GPU Partition

– Coming Soon: The Next Generation of Lawrencium LR7 Expansion

– User Portal myLRC is under Active Development

We Also Manage the Savio Cluster at UC Berkeley

For almost 20 years, the IT Division’s Scientific Computing Group at Berkeley Lab, also known as HPCS (High-Performance Computing Services), has stood out as a national leader and pioneer in high-performance computing — essentially defining the midrange research computing segment in the DOE and contributing to the service model now widely used at academic institutions. Scientific Computing operates the Lawrencium supercluster and supports more than 1300 scientists actively working on 360+ research projects. By providing cutting-edge computing infrastructure, the group enables researchers to turn bits and bytes into groundbreaking scientific discoveries across a wide range of disciplines, including climate modeling, exploration of new advanced materials, simulations of the early universe, analysis of data from astrophysics sky surveys, carbon sequestration, geochemistry, and a host of other pioneering endeavors.

In this article, we’ll highlight Lawrencium HPC computing at the Lab and the services that simplify using Lawrencium for scientific research. We’ll also cover the latest state-of-the-art hardware and the new services that will soon be made available to Lab scientists.

We provide large scale HPC services on Lawrencium, so scientists can focus on their research

Lawrencium is a shared computational Linux cluster with over 1300 compute nodes (~30,000 computational cores) made available to all Berkeley Lab researchers and their collaborators. The system consists of shared nodes and PI-contributed Condo nodes that are interconnected with a low-latency InfiniBand network and have access to over 2.5 petabytes of high-performance Lustre parallel filesystem storage. Multiple generations of CPU nodes, GPU nodes, large-memory nodes, and AMD nodes are available to support diverse computational workflows.

Using Lawrencium for computational needs provides many advantages over individual solutions. Standalone systems incur purchase costs plus recurring costs for equipment maintenance and data center facilities. Lawrencium allows researchers to avoid many of these costs while benefiting from economies of scale. Additionally, researchers have access to a larger pool of professionally managed resources than individuals or departments could procure on their own.

Lawrencium is also an excellent platform for researchers who need to develop or scale their codes before running them at larger supercomputing centers. Lawrencium provides many of the same features, with fast turnaround, on a smaller scale.

The Lab’s HPC services allow scientists to focus on their research rather than on their computing systems. In addition to the large pool of compute nodes, a dedicated data transfer node (DTN) and Globus are available for fast data transfer and data sharing. New this year is our Open OnDemand web portal service, which provides easy browser-based access to interactive applications and tools that run on Lawrencium’s computing resources on the backend.

No Cost and Low-Cost Access to Lawrencium

The Lab has made a significant investment in developing the Lawrencium cluster as a Condo Cluster program to grow and sustain midrange computing for Berkeley Lab. The Condo program provides Berkeley Lab researchers with state-of-the-art, professionally-administered computing systems and ancillary infrastructure. The intention is to improve grant competitiveness and to achieve economies of scale by utilizing centralized computing systems and data center facilities. The use of Lawrencium is offered on a three-tier cost structure ranging from free to low as follows:

- PI Compute Allowance (PCA) – Provides up to 300K Service Units (SUs) of free compute time per fiscal year to each qualified Berkeley Lab PI. An SU is defined as using a single CPU core for an hour, so 300K SUs is equivalent to 300K free computation hours on a single CPU core. PCA accounts are typically renewed in September each year but can also be renewed throughout the year upon individual request.

- Lawrencium On-Demand (recharge) – Provides additional computing resources for less than $0.01 per SU on the latest hardware (e.g., LR6 partition). When using hardware from older generations, such as LR5 and below, the usage rate is further reduced. Recharge projects can be requested all year long and are billed on a monthly basis. The usage of the LR3 partition will be free of charge starting June 2022.

- Lawrencium Condo – Besides taking advantage of the Lab’s investment in compute resources, PIs can buy into the cluster by purchasing compute nodes and becoming condo owners. Their research groups are granted priority access to the number of nodes they purchased, in the partition the PI bought into. They also get access to all other partitions with the low_prio QoS when those resources are not in use, giving them access to the whole breadth of Lawrencium resources.
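The SU definition above lends itself to a quick back-of-the-envelope calculation. The following is a minimal sketch, assuming a job is charged for every core it holds for its full wall time; the job size (4 nodes of 32 cores for 12 hours) is hypothetical, and the $0.01 figure is used only as the upper bound quoted for recharge on the latest hardware.

```python
def service_units(cores: int, hours: float) -> float:
    """SUs consumed: one SU is one CPU core used for one hour."""
    return cores * hours


PCA_ALLOWANCE_SU = 300_000      # free SUs per fiscal year under a PCA
RECHARGE_RATE_CEILING = 0.01    # dollars per SU; the article says "less than $0.01"

# Hypothetical job: 4 nodes x 32 cores each, running for 12 hours.
job_su = service_units(cores=4 * 32, hours=12)

# How many such jobs fit into one year's PCA allowance.
jobs_per_allowance = int(PCA_ALLOWANCE_SU // job_su)

# Upper bound on the recharge cost of one such job.
max_job_cost = job_su * RECHARGE_RATE_CEILING

print(f"SUs per job: {job_su:.0f}")
print(f"Jobs covered by a PCA: {jobs_per_allowance}")
print(f"Recharge cost per job: under ${max_job_cost:.2f}")
```

Actual charges depend on the partition and on how the scheduler accounts for partially used nodes, so treat these numbers as rough planning figures only.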

Storage Services on Lawrencium

By default, each user on Lawrencium is entitled to a 20 GB home directory, which receives regular backups. Each user also has access to the large, high-performance Lustre parallel scratch filesystem for working with non-persistent data, where a purge policy will be in place. As an added benefit, each PCA, recharge, and condo research group receives up to 200 GB of project space to hold research-specific application software shared among the group’s users. Beyond the standard storage services listed above, Scientific Computing offers a condo storage service that allows PIs and researchers to purchase extra storage disks to add to Lawrencium’s storage infrastructure. The intention is to provide a cost-effective, persistent storage solution for users or research groups that need to import and store large data sets over a long period of time to support their use of Lawrencium or dedicated research clusters.

Data Transfer on Lawrencium

A dedicated data transfer node (DTN) helps improve the data transfer experience for supercluster users. The DTN, lrc-xfer.lbl.gov, sits on LBLnet’s Science DMZ (Demilitarized Zone) to facilitate optimized data transfers between Lawrencium and other systems or institutions. Free Globus Online services are also available to Lab researchers, enabling unattended, fast, and secure data transfer and sharing. Researchers can also use applications that leverage the features and capabilities provided by Globus.
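For a small command-line transfer through the DTN, something like the following sketch may be useful. It only builds and prints an rsync command; it assumes the DTN accepts rsync over SSH, which this article does not state, and the username and paths are placeholders.

```python
import shlex

DTN_HOST = "lrc-xfer.lbl.gov"  # DTN hostname given in the article


def build_rsync_command(user: str, local_path: str, remote_path: str) -> list[str]:
    """Build an rsync command that copies local_path to the DTN.

    -a preserves permissions and timestamps, -v is verbose, and
    -P shows progress and keeps partial files so a transfer can resume.
    """
    return ["rsync", "-avP", local_path, f"{user}@{DTN_HOST}:{remote_path}"]


# Hypothetical user and paths, for illustration only.
cmd = build_rsync_command("jdoe", "results/", "/path/on/lawrencium/results/")
print(shlex.join(cmd))
```

For large or unattended transfers, Globus remains the better fit, since it handles retries and integrity checks on its own.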

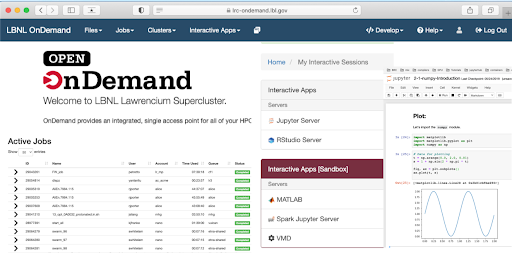

Open OnDemand Web Portal Service

Open OnDemand is a web portal platform that provides convenient access to Lawrencium clusters and file systems with minimal knowledge of Linux and scheduler commands. It lets researchers view and edit files, upload and download files, submit and monitor jobs, and connect via SSH, all through a web browser. Users can also launch interactive GUI applications on compute nodes from within the platform, something that can be challenging on a typical HPC cluster.

The interactive applications supported at the Lab include Jupyter notebooks, RStudio, and MATLAB, all of which are available through the browser-based service at lrc-ondemand.lbl.gov. The front-end interface runs on Windows, Mac, and Linux operating systems. Lawrencium’s compute nodes provide the backend computing resources for all computation and visualization, so even the most modest personal computers and laptops can use this service.

Lastly, the newly added Interactive Desktop application allows researchers to launch the Xfce Desktop Environment on a Lawrencium compute node. Connecting through a web browser with the noVNC client, users can then run graphical applications in that session, such as ParaView, VMD, and the Spyder IDE, to name a few. Active development is ongoing to improve interactivity and user experience.

New Addition of Nvidia A40 GPU Nodes to ES1 GPU Partition

Deep learning has completely transformed the landscape of research computing. The insatiable demand for FLOPS and IOPS has become the dominant force driving the exponential growth of HPC capacity. To meet this challenge head-on, Scientific Computing is adding 18 GPU nodes to the ES1 partition. Each GPU node is equipped with four Nvidia A40 GPUs and 512 GB of memory. When these nodes come online in early Summer 2022, the GPU computing power of Lawrencium will be more than doubled.
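As a rough illustration of how a job might request GPUs on one of these nodes, the sketch below generates a Slurm batch script. The partition name `es1` (lowercased from "ES1"), the account name, and the training command are assumptions for illustration; the actual partition, QoS, and GPU resource names should be taken from the Lawrencium user documentation.

```python
GPUS_PER_NODE = 4  # each new node carries four A40 GPUs, per the article


def gpu_job_script(account: str, gpus: int, hours: int, command: str) -> str:
    """Return the text of a single-node Slurm batch script requesting GPUs."""
    if not 1 <= gpus <= GPUS_PER_NODE:
        raise ValueError(f"a single node offers 1 to {GPUS_PER_NODE} GPUs")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --account={account}",      # hypothetical project account
        "#SBATCH --partition=es1",           # assumed partition name
        "#SBATCH --nodes=1",
        f"#SBATCH --gres=gpu:{gpus}",        # generic GPU request
        f"#SBATCH --time={hours:02d}:00:00",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


# Hypothetical account and command, for illustration only.
print(gpu_job_script("my_account", gpus=2, hours=4, command="python train.py"))
```

The generated text could be saved to a file and submitted with `sbatch`.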

Coming Soon: The Next Generation of Lawrencium LR7 Expansion

Science IT’s mission is to provide state-of-the-art computing and storage solutions to Berkeley Lab researchers to enable groundbreaking scientific discoveries, so the capabilities of Lawrencium are constantly expanded through the addition of the latest hardware. The Lab’s main data center, which hosts Lawrencium, was recently upgraded to provide the power and cooling needed by today’s high-density HPC systems. One exciting addition to Lawrencium will be the LR7 expansion, which is slated to be deployed in Fall 2022. The LR7 expansion will consist of 92 Dell PowerEdge C6520 servers, each equipped with two next-gen 28-core Intel Xeon Gold 6330 processors and 256 GB of memory, for a total of 5152 cores. All LR7 compute nodes will be interconnected with the blazingly fast Mellanox HDR InfiniBand fabric at 100 Gbps. The LR7 expansion will further boost the computing capacity of Lawrencium and, we hope, significantly reduce the time to scientific breakthroughs.
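The LR7 totals follow directly from the per-server figures quoted above. A short sketch of the arithmetic, using only numbers from this article:

```python
SERVERS = 92
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 28
MEMORY_GB_PER_SERVER = 256

cores_per_server = SOCKETS_PER_SERVER * CORES_PER_SOCKET
total_cores = SERVERS * cores_per_server
total_memory_gb = SERVERS * MEMORY_GB_PER_SERVER
memory_per_core_gb = MEMORY_GB_PER_SERVER / cores_per_server

print(f"Cores per server: {cores_per_server}")
print(f"Total LR7 cores: {total_cores}")
print(f"Total LR7 memory: {total_memory_gb} GB")
print(f"Memory per core: {memory_per_core_gb:.2f} GB")
```

The memory-per-core figure, roughly 4.6 GB, is a handy guide when sizing jobs that share a node.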

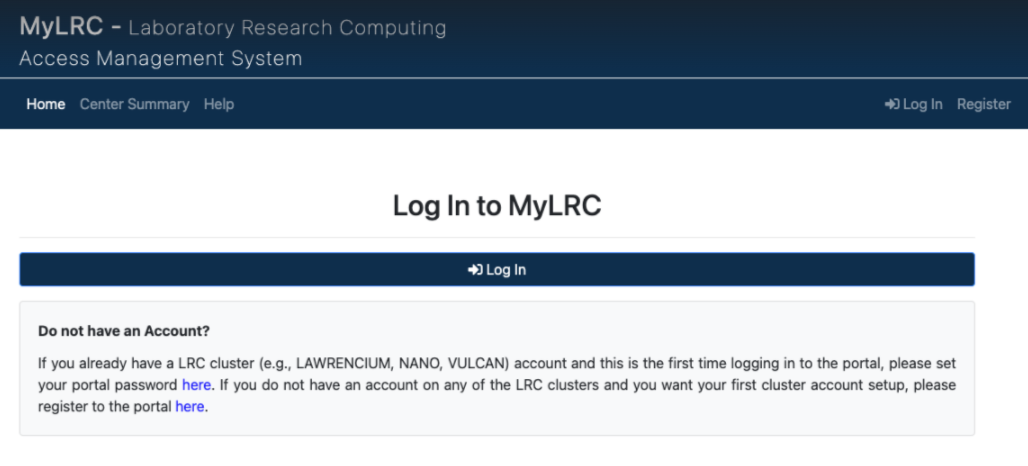

User Portal myLRC is under Active Development

To automate the management of Lawrencium user accounts, projects, allocations, billing reports, and Project ID validation, Scientific Computing is actively developing a new user-friendly web portal called myLRC. myLRC will replace the current Google Forms with a PostgreSQL database that captures users’ input, and it will interface with the Slurm job scheduler and the account databases to automate Lawrencium resource management. The portal will use CILogon for user authentication. Lab PIs and researchers will be able to submit and monitor account and project requests on the portal, manage group access by adding or removing users from projects, check their PCA’s remaining SUs, view details on service units used by individual project members, and check job status.

We Also Manage the Savio Cluster at UC Berkeley

In parallel with managing Lawrencium at Berkeley Lab, the Scientific Computing Group also manages the high-performance computing service for the UC Berkeley Research Computing program. This service, which offers and supports access to UC Berkeley’s Savio Institutional/Condo Cluster, is designed to provide a similar environment and more to campus researchers, given that many Lab scientists have dual appointments or collaborations with campus departments. Please contact the Berkeley Lab Scientific Computing Group at hpcshelp@lbl.gov with questions and comments.
