By Erica Yee • August 28, 2018

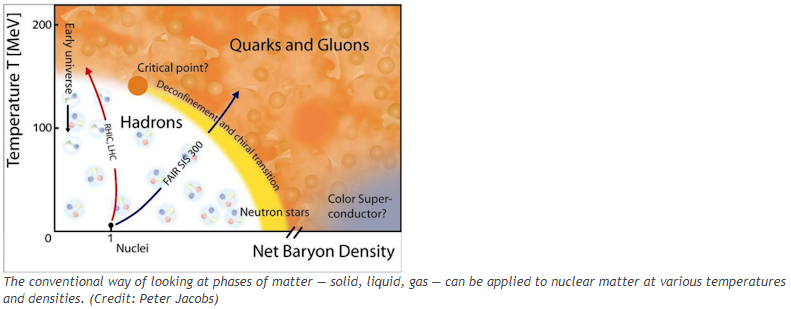

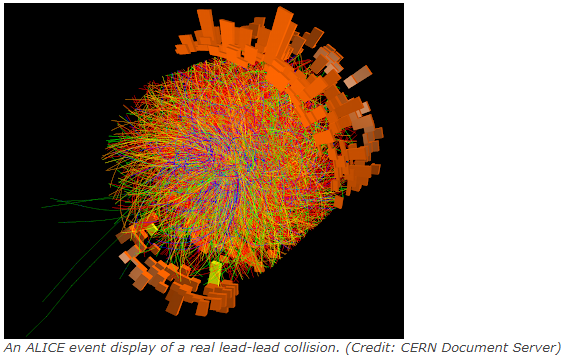

Scientists can’t go back in time to witness the origin of the universe, so they do the next best thing: recreate the conditions of the early universe in the laboratory. A few microseconds after the Big Bang, the universe was filled with hot, dense matter called the quark-gluon plasma (QGP). As the universe cooled, the quarks and gluons coalesced into protons and neutrons, the subatomic particles that form the nuclei of atoms and make up all ordinary matter in today’s universe. Scientists study this transition by colliding heavy nuclei at CERN’s Large Hadron Collider (LHC). These collisions generate temperatures more than 100,000 times hotter than the center of the sun, causing the protons and neutrons in these nuclei to “melt” and create QGP.

“Studying the quark-gluon plasma is similar to studying any new material created in the laboratory. Understanding of the structure and behavior of the QGP comes from an array of different measurements which need to be understood together in a consistent picture,” said Peter Jacobs of Berkeley Lab’s Nuclear Science Division. “ALICE is uniquely suited for that scientific program.”

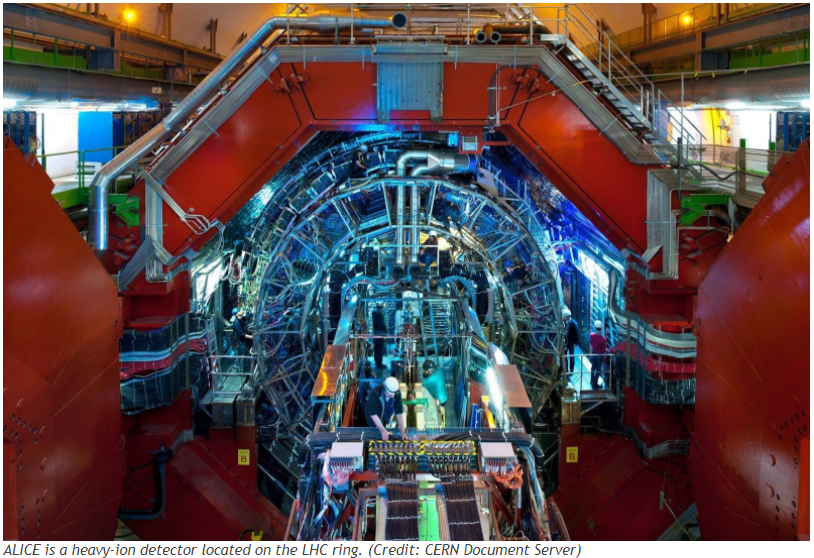

ALICE (A Large Ion Collider Experiment) is one of the large detectors in the LHC ring, designed to investigate the properties of the QGP. Researchers from 37 countries participate in the ALICE collaboration, and each participating country must provide computing resources to store and analyze the copious data the experiment produces. The Scientific Computing Group (SCG), part of Berkeley Lab’s Science IT Department, recently established a new ALICE site on the Worldwide LHC Computing Grid to provide a significant amount of ALICE computing and data storage.

During the 2018 LHC running period, the ALICE experiment will collect data from more than 600 billion independent lead-lead collisions and 400 billion proton-proton collisions, representing more than 20 petabytes (PB) of raw data. To put that into perspective, a typical DVD can hold 4.7 gigabytes (GB) of data. One PB is equivalent to a thousand terabytes (TB) or 1 million GB, so 1 PB of storage could hold over 200,000 DVD-quality movies. That means the 20 PB of raw data is equivalent to well over 4 million DVDs.
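The DVD comparison can be checked with a few lines of arithmetic. The sketch below assumes decimal units (1 PB = 1,000,000 GB) and the 4.7 GB DVD capacity quoted above; the function and constant names are illustrative only.

```python
GB_PER_PB = 1_000_000   # decimal units: 1 PB = 1,000 TB = 1,000,000 GB
DVD_CAPACITY_GB = 4.7   # DVD capacity quoted in the article


def dvds_needed(petabytes: float) -> float:
    """Return how many 4.7 GB DVDs would hold the given number of petabytes."""
    return petabytes * GB_PER_PB / DVD_CAPACITY_GB


print(f"1 PB  ~ {dvds_needed(1):,.0f} DVDs")
print(f"20 PB ~ {dvds_needed(20):,.0f} DVDs")
```

Under these assumptions, 1 PB works out to roughly 213,000 DVDs and 20 PB to about 4.26 million, consistent with the figures above.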

“These data need to be processed, analyzed, and compared to simulated collisions in order to extract the physics information from the experiment,” said Jeff Porter, a Berkeley Lab nuclear physicist and the computing project lead for ALICE collaborators in the United States.

ALICE physicists seek to measure the properties of the QGP in detail, which will reveal much about the strong nuclear force and the behavior of matter governed by it. Among ALICE’s accomplishments to date are precise measurements of the “collective flow” of the QGP, showing that it is one of the most perfect, viscosity-free fluids allowed by the laws of physics. ALICE has also carried out detailed measurements of “jet quenching,” which occurs when a very energetic quark passes through the QGP and interacts in a process similar to an X-ray scan at a hospital. In a hospital scan, the scattered X-rays provide an image of the patient’s abdomen; in jet quenching, the scattered quarks provide a sort of tomographic image of the QGP.

The LHC runs heavy-ion experiments with ALICE for four weeks each year. The huge amount of data produced in that period — around 8 GB stored per second — is made available to ALICE researchers via the Worldwide LHC Computing Grid.

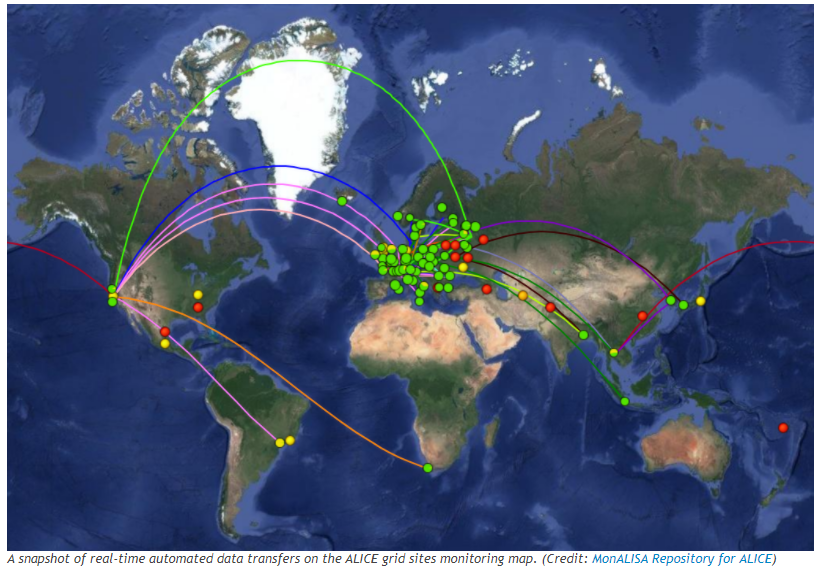

About 80 computing facilities at academic and research institutions around the world make up the ALICE Grid. The distributed infrastructure allows multiple copies of data to be kept at different sites, with no single point of failure. Using resources from SCG and the National Energy Research Scientific Computing Center (NERSC), Berkeley Lab stores 1.5 PB of the total 63 PB of data in the ALICE file system distributed around the world.

Over half of the Grid’s computing power goes to simulating the heavy-ion collisions ALICE detects. “There’s an extensive program to understand the performance of the experiment by doing simulated experiments,” said Porter. “This way we understand what our detector response should be and what our efficiencies are.”

Any participating researcher can request analysis jobs on both simulated and experimental collision data by accessing a virtual centralized database that knows what data are stored where. When a researcher submits a job to the central service, the job is routed to run locally at whichever site holds the relevant data. This task queue system eliminates the need for individual sites to figure out which remote site has the information they need.
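The idea of sending the job to the data, rather than the data to the job, can be sketched in a few lines. This is a toy illustration, not the Grid's actual software: the catalog contents, dataset names, site labels, and the least-loaded tie-breaking rule are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical central catalog: dataset name -> sites holding a replica.
CATALOG = {
    "pbpb_2018_run_A": ["LBNL", "CERN"],
    "sim_pp_batch_7": ["GSI"],
}


def route_job(dataset, catalog, load):
    """Pick the least-loaded site that already stores the dataset."""
    sites = catalog.get(dataset)
    if not sites:
        raise LookupError(f"no site holds {dataset!r}")
    # min() returns the first minimum, so ties go to the earliest-listed site.
    chosen = min(sites, key=lambda site: load[site])
    load[chosen] += 1  # record that one more job is now queued at that site
    return chosen


load = defaultdict(int)  # running job count per site, starting at zero
first = route_job("pbpb_2018_run_A", CATALOG, load)
second = route_job("pbpb_2018_run_A", CATALOG, load)
print(first, second)  # prints "LBNL CERN"
```

The researcher only names the dataset; the lookup of which site holds it happens centrally, which is the property the task queue provides.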

“In order to do efficient processing of the data, you don’t want to be pulling the data around the network. What you do is you send the data out, park it somewhere where you know where it is and have computing that’s connected to that storage,” explained Porter. “It’s such a big operation, and almost everything is automated. There are algorithms that say, ‘I’m reading some data. I’m producing some other data. I store a copy locally, but I also want to store it somewhere else on the Grid.’”

That data started flowing in when systems at the Lab joined the Grid. “We’ve seen as much as 58 TB transferred in one day to the Berkeley Lab site as we were ramping up production,” said SCG storage architect John White.

Porter works with the SCG team to ensure its computing system is configured correctly to handle jobs and the storage system is operational. “The team is very responsive, and now they’re on the ALICE growth plan,” he said. “We expect to add a significant amount of resources in the next year.”

The size of the Grid grows by around 20 percent annually, usually by adding more computing resources at individual sites. Over the past six years, the total number of jobs processed at any given time has grown from 30,000 to a current peak of 130,000. That number is expected to grow as the demand for scientific computing and storage continues to outstrip available resources.
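As a rough cross-check, the job counts above imply a compound annual growth rate that can be computed directly. The sketch assumes smooth year-over-year compounding across the six years, which the article does not state; the function name is illustrative.

```python
def annual_growth_rate(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


rate = annual_growth_rate(30_000, 130_000, 6)
print(f"Implied annual growth in concurrent jobs: {rate:.1%}")
```

Under that assumption the concurrent job count grew by roughly 28 percent a year. That figure tracks jobs rather than installed capacity, so it need not match the Grid's roughly 20 percent annual growth in size.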

The Grid runs around the clock every day of the year, with the maintenance and management of the system distributed among the sites. “It is an extremely useful system that everyone is using all the time,” said Porter. “The fact that it’s worldwide means you’re not tied to any clock-time. There are physicists in China who are submitting jobs at a different time from the physicists in Europe and the U.S.”