ScienceIT Updates

July 2023

By Wei Feinstein, Karen Fernsler, Jordan Jung

Contents

- About the Lawrencium Supercomputer

- We are Excited to Announce:

- What’s the Cost of Using Lawrencium?

- MyLRC Portal is in Service

- How to Access Lawrencium via Open OnDemand

- Globus for Data Transfers Between Institutions and Cloud Services

- Are You Familiar with Savio at UC Berkeley?

- Coming Next: Science Project Storage Service (SPSS v2)

About the Lawrencium Supercomputer

Lawrencium is a shared Linux computing cluster with over 1,300 compute nodes (~30,000 cores), available to all Berkeley Lab researchers and their collaborators.

The overarching goal behind Lawrencium is to provide computing resources and services that let scientists focus on their research.

The system combines a large institutional investment in shared nodes with PI-contributed Condo nodes, all interconnected by a low-latency InfiniBand network that is well suited to a wide range of applications. Lawrencium has 3.5 petabytes of high-performance Lustre parallel scratch storage as well as a shared home filesystem that can be accessed from any of the other computational resources managed by HPCS. Lawrencium operates on an “Evergreen” model in which new hardware is added continually, so it also includes multiple generations of CPUs and GPUs, as well as large-memory and AMD nodes, to support diverse computational workflows and smaller budgets.

We are Excited to Announce:

Free Computing! PI Computing Allowance to be Increased

Eligible Lab PIs can apply for a free allocation on Lawrencium under a program called the “PI Computing Allowance (PCA),” which can be renewed annually. Starting October 1, 2023, the PCA allocation will increase from 300K Service Units (SUs) to 500K SUs to meet researchers' growing needs. As before, collaborating researchers can pool their allocations for project needs.

Lawrencium LR7 Partition is Now in Production

One hundred Dell PowerEdge C6520 direct-liquid-cooled servers, each equipped with two 28-core Intel Ice Lake 6330 processors, have been installed as the new Lawrencium LR7 partition. Both 256 GB and 512 GB node configurations are available, and the new processor architecture adds two more memory channels, which greatly increases performance for memory-bound applications. To make effective use of these 56-core nodes, we have switched from node-based scheduling to a core-based model that fills each node more completely; users can still request an entire node if needed. As with the other Lawrencium partitions, users who need dedicated compute time can purchase identical nodes through our Condo Cluster program.
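To illustrate the difference between a core-based request and a whole-node request, here is a minimal Python sketch that renders batch-script headers for both cases. It rests on assumptions not stated in this article: that the scheduler is Slurm, that the partition is named `lr7`, and that `pc_example` stands in for a real account name. The helper `lr7_job_header` is hypothetical, and a real job may need further options (for example a QoS), so please check the Lawrencium documentation before submitting.

```python
CORES_PER_LR7_NODE = 56  # two 28-core processors per node (from the article)


def lr7_job_header(cores, whole_node=False, partition="lr7",
                   account="pc_example", walltime="01:00:00"):
    """Return Slurm #SBATCH lines for a single-node job.

    Assumptions (not from the article): the scheduler is Slurm, the
    partition is called "lr7", and "pc_example" is a placeholder account.
    """
    if not 1 <= cores <= CORES_PER_LR7_NODE:
        raise ValueError(f"cores must be between 1 and {CORES_PER_LR7_NODE}")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --account={account}",
        f"#SBATCH --time={walltime}",
        "#SBATCH --nodes=1",
    ]
    if whole_node:
        # Ask for the entire node so that no other job shares it.
        lines.append("#SBATCH --exclusive")
    else:
        # Core-based request: the scheduler may place other jobs
        # on the remaining cores of the same node.
        lines.append(f"#SBATCH --ntasks={cores}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(lr7_job_header(cores=8))                    # share a node
    print()
    print(lr7_job_header(cores=56, whole_node=True))  # take the whole node
```

Requesting only the cores a job can actually use leaves the rest of the node available to other jobs, which is the point of the core-based model described above.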

18 New Nvidia A40 GPU Nodes are the Newest Addition to the ES1 GPU Partition

Each new node consists of four Nvidia A40 GPU cards attached to an AMD EPYC 7713 64-core processor with 512 GB of RAM. These powerful A40 nodes, along with software packages such as PyTorch and TensorFlow made available on Lawrencium, will help meet the fast-growing demand for AI/ML research.

LRC Lustre Filesystem Performance Boost

By the time you read this article, we will have installed a new DDN 18K controller couplet, which will double the peak large streaming write performance of the Lawrencium Scratch filesystem from 80 GB/s to 160 GB/s. We have also added more NVMe storage to the Flash tier to accelerate small-file I/O. LRC Condo Storage users will benefit from both of these changes as well.

What’s the Cost of Using Lawrencium?

The Lab has invested significantly in developing the Lawrencium cluster as a Condo Cluster program that provides optimal HPC services with minimal charges to staff researchers. Lawrencium is offered under a three-tier cost structure ranging from free to low-cost:

- Free Option: PI Computing Allowance (PCA) – Provides up to 500K Service Units (SUs) of free compute time per fiscal year to each qualified Berkeley Lab PI. Users have access to all Lawrencium computing resources.

- Pay As You Go Option: On-Demand (recharge) – Provides additional computing resources for less than $0.01 per SU, even on the latest LR7 hardware, with access to all the computing resources on the cluster.

- Condo Owner Option: Condo projects – PIs can buy into the cluster by purchasing compute nodes in exchange for higher-priority access to the number of nodes they purchase in the partition, plus low-priority access to all partitions on Lawrencium.
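As a rough illustration of how the free and pay-as-you-go tiers combine, the sketch below splits a yearly SU requirement into the part covered by the PCA and the part that would be recharged. It takes usage directly in SUs, since this article does not define how SUs map to core-hours, and it treats $0.01 per SU as an upper bound because the exact rate is only given as "less than $0.01." The function name `estimate_recharge` is hypothetical.

```python
PCA_SUS_PER_YEAR = 500_000   # free allowance per PI from October 1, 2023 (from the article)
MAX_DOLLARS_PER_SU = 0.01    # article says "less than $0.01 per SU", so an upper bound


def estimate_recharge(needed_sus, pooled_pis=1):
    """Split a yearly SU need into a free part and a paid part.

    Returns (free_sus, paid_sus, max_cost_dollars). The cost is an upper
    bound because the actual per-SU rate is not stated in the article.
    """
    if needed_sus < 0 or pooled_pis < 1:
        raise ValueError("needed_sus must be >= 0 and pooled_pis >= 1")
    allowance = PCA_SUS_PER_YEAR * pooled_pis  # collaborators may pool allowances
    free_sus = min(needed_sus, allowance)
    paid_sus = needed_sus - free_sus
    return free_sus, paid_sus, paid_sus * MAX_DOLLARS_PER_SU


if __name__ == "__main__":
    free, paid, cost = estimate_recharge(1_200_000, pooled_pis=2)
    print(f"free: {free:,} SUs, paid: {paid:,} SUs, at most ${cost:,.2f}")
```

For example, two PIs pooling their allowances for a project that needs 1.2 million SUs would have 1,000,000 SUs covered for free and would pay for the remaining 200,000 SUs, which at the upper-bound rate comes to no more than $2,000.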

For Lawrencium documentation, including more information on storage capabilities, visit Computing on Lawrencium.

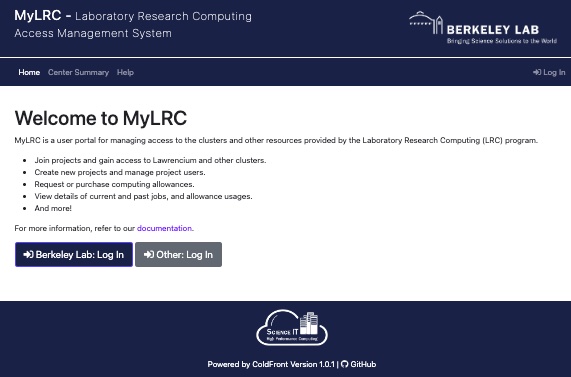

MyLRC Portal is in Service

MyLRC is a newly launched web portal that automates user and project account management and status notifications. The portal uses CILogon, an integrated identity and access management platform, for user authentication. Lab PIs and researchers can submit and monitor account and project-related requests on the portal, manage group access by adding or removing users from projects, check their PCA’s SU balance, view details on service units used by individual project members, and check job status.

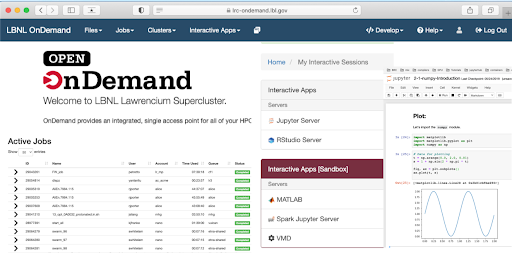

How to Access Lawrencium via Open OnDemand

Open OnDemand is a web interface, usable from Windows, Mac, and Linux operating systems, for accessing Lawrencium compute nodes and file systems entirely through a web browser. To launch Jupyter notebooks, analyze data in RStudio, program in MATLAB or VS Code, or start visualization applications such as VMD or ParaView, all you need to do is open the Open OnDemand service at lrc-ondemand.lbl.gov.

Globus for Data Transfers Between Institutions and Cloud Services

Do you need to transfer data between your computer and an AWS bucket, Google Cloud Platform, or Google Drive account? Do you have large datasets to transfer to existing filesystems on various hosts? Globus may be the answer you are seeking! It features a straightforward user interface that lets users access the file systems hosting their data and, with a few clicks, transfer the data from one computer or cloud storage service to another. Once a transfer is initiated, and as long as both endpoints remain available, the user is free to get on with life: Globus does all the work unattended and can notify users when their transfers are complete. To access the Globus UI, use a browser to navigate to globus.lbl.gov and authenticate using your Lab credentials.

Globus also supports “connectors,” which allow data transfers between a computer and cloud storage such as AWS buckets and Google Cloud Platform. The Lab now offers the following connectors and collections:

- For AWS buckets, browse the available collections in the Globus UI using the “Collections” item in the left-hand menu and look for “LBNL AWS S3 Collection” (UUID 9c6d5242-306a-4997-a69f-e79345086d68).

- For Google Cloud Platform, browse for “LBNL Google Cloud Storage Collection” (UUID 54047297-0b17-4dd9-ba50-ba1dc2063468).

- For Google Drive, browse for “LBNL Gdrive Access” (UUID 37286b85-fa2d-41bd-8110-f3ed7df32d62).

- Lawrencium users can access their cluster data on either the scratch file system or their home directory by browsing for the lbnl#lrc collection (UUID 5791d5ee-c85a-4753-91f0-502a80d050d7).

To facilitate browsing, please be sure the “Used Recently” option is not selected.
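For those who prefer scripting to the web UI, the collection UUIDs listed above can also be used from the command line. The following is a minimal sketch, assuming the Globus CLI is installed and you have already authenticated with it; it only builds the `globus transfer` command string and does not run anything. The helper name `globus_transfer_command`, the short keys in `LBNL_COLLECTIONS`, and the example paths are all hypothetical.

```python
import shlex

# Collection UUIDs as listed in this article; the short keys are our own labels.
LBNL_COLLECTIONS = {
    "aws_s3": "9c6d5242-306a-4997-a69f-e79345086d68",
    "google_cloud_storage": "54047297-0b17-4dd9-ba50-ba1dc2063468",
    "google_drive": "37286b85-fa2d-41bd-8110-f3ed7df32d62",
    "lrc": "5791d5ee-c85a-4753-91f0-502a80d050d7",
}


def globus_transfer_command(src, src_path, dst, dst_path,
                            label=None, recursive=False):
    """Build (but do not run) a `globus transfer` command line.

    src and dst are keys of LBNL_COLLECTIONS; the paths are whatever
    locations you can see on those collections.
    """
    args = ["globus", "transfer"]
    if recursive:
        args.append("--recursive")      # copy a whole directory tree
    if label:
        args += ["--label", label]      # human-readable task name
    args.append(f"{LBNL_COLLECTIONS[src]}:{src_path}")
    args.append(f"{LBNL_COLLECTIONS[dst]}:{dst_path}")
    return shlex.join(args)             # shell-safe quoting (Python 3.8+)


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    print(globus_transfer_command(
        "lrc", "/path/on/scratch/run1/",
        "aws_s3", "/my-bucket/run1/",
        label="run1 backup", recursive=True,
    ))
```

Printing the command rather than executing it lets you review the source and destination before anything moves.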

If you are interested in AWS buckets, please refer to this documentation: Getting an Amazon Web Services or Google Cloud Platform Account.

With Globus Plus, you can use Globus Connect Personal between personal machines for transfers to any of the currently supported connectors and collections. To request membership in Globus Plus, log in to globus.lbl.gov and navigate to Settings using the left-side menu bar. Select the Globus Plus tab, choose “LBNL IT Plus Sponsor,” and follow the directions.

If you see a connector you would like us to support, or need help setting up a managed Globus endpoint, please email hpcshelp@lbl.gov.

Are You Familiar with Savio at UC Berkeley?

ScienceIT also manages the high-performance computing service for the UC Berkeley Research Computing program. This service, offering and supporting access to UC Berkeley’s Savio Institutional/Condo Cluster, is designed to provide a similar environment and level of service to campus researchers, given that many Lab scientists have dual appointments or collaborations with campus departments.

Coming Next: Science Project Storage Service (SPSS v2)

The next generation of SPSS for storing large datasets will be made available VERY soon. Please stay tuned!

Learn more about ScienceIT at scienceit.lbl.gov.